Caltech engineers have created software capable of assessing reactions to a movie using the audience's facial expressions.

Developed by Disney Research in collaboration with Yisong Yue of Caltech and colleagues at Simon Fraser University, the software relies on a new class of algorithms known as factorized variational autoencoders (FVAEs).

FVAEs automatically translate images of complex objects, like faces, into sets of numerical data. The contribution of Yue and his colleagues was to train the FVAEs to incorporate metadata or other pertinent information about the data being analyzed.

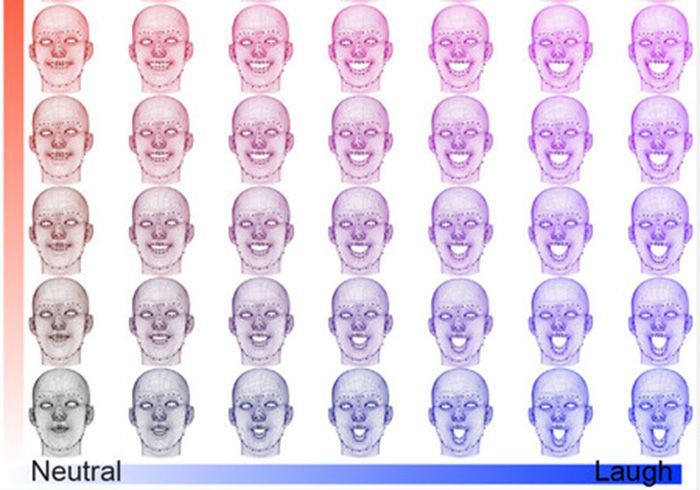

In this case, FVAEs take images of movie-watchers' faces and break them down into a series of numbers representing specific features: one number for how much a face is smiling, another for how wide open the eyes are, and so on.

Metadata then allow the algorithm to connect those numbers with other relevant bits of data—for example, with other images of the same face taken at different points in time, or of other faces at the same point in time.

With enough information, the system can assess how an audience is reacting to a movie so accurately that it can predict an individual's responses based on just a few minutes of observation, says Disney research scientist Peter Carr.
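To make the idea of predicting from a few minutes of observation concrete, here is a minimal sketch in Python. It is not the team's FVAE: it swaps in a plain linear low-rank factorization, assumes each reaction value is the product of a per-person factor and a per-time factor, and runs on synthetic data. All names and sizes (`n_people`, `n_frames`, `rank`, `observed`) are hypothetical choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 50 viewers, 200 time steps, 3 latent factors.
n_people, n_frames, rank = 50, 200, 3

# Synthetic data (an assumption, not real audience data): each reaction value
# is the inner product of a per-person factor and a per-time factor, plus noise.
person_factors = rng.normal(size=(n_people, rank))
time_factors = rng.normal(size=(n_frames, rank))
reactions = person_factors @ time_factors.T
reactions += 0.01 * rng.normal(size=reactions.shape)

# Step 1: learn the time factors from every viewer except the last one,
# using a truncated SVD as a simple linear stand-in for the learned model.
train = reactions[:-1]
_, _, vt = np.linalg.svd(train, full_matrices=False)
V = vt[:rank].T  # shape (n_frames, rank)

# Step 2: watch the held-out viewer for only the first few frames and fit
# that viewer's personal factor by least squares.
observed = 20
new_viewer = reactions[-1]
u_new, *_ = np.linalg.lstsq(V[:observed], new_viewer[:observed], rcond=None)

# Step 3: predict the viewer's reactions for the rest of the showing.
predicted = V[observed:] @ u_new
rmse = np.sqrt(np.mean((predicted - new_viewer[observed:]) ** 2))
print(f"RMSE on unobserved frames: {rmse:.4f}")
```

The sketch works because the shared time factors carry what is common to the whole audience, so only a handful of numbers per viewer must be estimated from the short observation window. The FVAE described in the article replaces this linear model with a neural network, which is not reproduced here.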

But the technique's potential goes far beyond the theater, says Yue, assistant professor of computing and mathematical sciences in Caltech's Division of Engineering and Applied Science.

"Understanding human behavior is fundamental to developing AI [artificial intelligence] systems that exhibit greater behavioral and social intelligence. For example, developing AI systems to assist in monitoring and caring for the elderly relies on being able to pick up cues from their body language. After all, people don't always explicitly say that they are unhappy or have some problem," Yue says.

The team will present its findings at the IEEE Conference on Computer Vision and Pattern Recognition July 22 in Honolulu.

"We are all awash in data, so it is critical to find techniques that discover patterns automatically," says Markus Gross, vice president for research at Disney Research. "Our research shows that deep-learning techniques, which use neural networks and have revolutionized the field of artificial intelligence, are effective at reducing data while capturing its hidden patterns."

The research team applied FVAEs to 150 showings of nine movies, including Big Hero 6, The Jungle Book, and Star Wars: The Force Awakens. They used a 400-seat theater instrumented with four infrared cameras to monitor the faces of the audience in the dark. The result was a data set that included 68 landmarks per face captured from a total of 3,179 audience members at a rate of two frames per second—yielding some 16 million individual images of faces.

"It's more data than a human is going to look through," Carr says. "That's where computers come in—to summarize the data without losing important details."

The pattern recognition technique is not limited to faces. It can be used on any time-series data collected from a group of objects. "Once a model is learned, we can generate artificial data that looks realistic," Yue says. For instance, if FVAEs were used to analyze a forest—noting differences in how trees respond to wind based on their type and size as well as wind speed—those models could be used to create an animated simulation of the forest.

In addition to Carr, Zhiwei Deng, Stephan Mandt, and Yue, the research team included Rajitha Navarathna and Iain Matthews of Disney Research and Greg Mori of Simon Fraser University. The study, titled "Factorized Variational Autoencoders for Modeling Audience Reactions to Movies," was funded by Disney Research.

By Robert Perkins

"Neural Networks Model Audience Reactions to Movies" was originally published on the Caltech website.