Combating sexual assault and misconduct on campus is a complex and ongoing process. As illustrated in this report, institutions are taking a multi-pronged approach and using different methods for data collection, response, and training and education.

There is no single established best method in any area, nor any one-size-fits-all approach. Part of the task for universities is to measure changes in response to the steps they are taking. Those changes include but are not strictly limited to prevalence rates: they also include knowledge and utilization of campus resources, satisfaction of those who use campus resources, reduction of barriers to reporting, efficiency and effectiveness of training and educational programs and adjudication processes, long-term health of victims, and many other factors.

Understanding what constitutes success is not always straightforward. For example, an institution that successfully lowers barriers to reporting may witness a seeming increase in prevalence rates due to a higher percentage of incidents being reported. Teasing out the full picture may be complex and require contextualization.
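The reporting effect described above can be made concrete with a small arithmetic sketch. It assumes a deliberately simple model in which the expected number of reports is the student population times the true prevalence times the share of incidents that get reported. The function name and every figure below are hypothetical and chosen only for illustration; none of them come from the survey.

```python
def expected_reports(population, true_prevalence, reporting_rate):
    """Expected number of reported incidents under a simple multiplicative model.

    All three inputs are illustrative assumptions, not survey results.
    """
    return population * true_prevalence * reporting_rate


# Hypothetical campus of 10,000 students.
# Before: 10% true prevalence, 5% of incidents reported.
before = expected_reports(10_000, 0.10, 0.05)

# After: true prevalence falls to 8%, but lower barriers raise reporting to 10%.
after = expected_reports(10_000, 0.08, 0.10)

print(f"Reports before: {before:.0f}")
print(f"Reports after:  {after:.0f}")
print("Reports rose even though true prevalence fell:", after > before)
```

In this sketch the count of reports rises while the underlying prevalence falls, which is why report counts alone cannot be read as a measure of prevalence without context on reporting behavior.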

With that in mind, institutions are measuring the effectiveness of the steps they are taking. Many institutions monitor counts of relevant behaviors (e.g., reports of misconduct, both formal and informal; police, alcohol, and administrative board incidents) on a regular basis. They also frequently collect program evaluation data to assess the effectiveness of campus resources.

More than half of responding institutions (30/55) most recently collected this information in the current term, and 82% (45/55) have collected it within the past academic year. Measuring Change – Figure 1 and Figure 2 show when the most recent data collection for program and resource evaluation was conducted, and illustrate that such data collection follows a different, more frequent schedule than student surveys on prevalence and climate.

84% (46/55) of institutions said they were developing new or improved ways of measuring the effectiveness of policies, programs, and interventions.

Several institutions that said they were not yet doing so noted that they are putting systems in place that will allow them to begin in the near future. Institutions are using various mechanisms for measuring effectiveness.

Universities are evaluating effectiveness in part through gathering student opinion and feedback. This includes surveying students and conducting focus groups. It also includes assessing the satisfaction and outcomes for students who use particular campus resources. For example, at Princeton University, the Sexual Harassment/Assault Advising, Resources and Education (SHARE) office has implemented a client satisfaction questionnaire for victims, including items assessing the impact of the service on the student’s academic capacity, ability to stay enrolled, relationships, thoughts and feelings about self and the specific interpersonal violence issue. Another client satisfaction questionnaire is used for students attending SHARE’s post-adjudication program for respondents found responsible for Title IX violations.

The University of Iowa asks for feedback from individuals who utilize the Office of the Sexual Misconduct Response Coordinator to learn of their complaint options, accommodation options, and support resources. Pennsylvania State University is studying how much the student body knows about Title IX, and what the term “Title IX” signifies to them.

Universities are evaluating effectiveness by looking directly at trends. For example, Northwestern University plans to compare its 2015 campus climate survey data against the next campus climate survey data (to be conducted in 2017 or 2018) to help measure the effectiveness of programs and any change in the awareness of resources. Northwestern has also overhauled how it measures the effectiveness of the in-person interactive theater presentation/training required of all new undergraduate students. The advocacy program for victims is routinely assessed for student satisfaction and effectiveness.

One proposed method for measuring the effectiveness of campus policies, programs, and interventions related to sexual violence is to adapt the Level of Exposure Scale, developed by the Center on Violence Against Women and Children (VAWC) at Rutgers University–New Brunswick. The scale is designed to measure students’ awareness of the specific sexual violence programming and resources available on a particular campus, so it can be tailored to different campuses.

This scale was first used on the Rutgers–New Brunswick campus climate survey, and provided a baseline measurement of awareness of on-campus sexual violence programming and resources. To measure whether students’ awareness of resources has changed following the implementation of the action plan, researchers can adapt the scale to include additional programming and resources that have been administered as part of the action plan. The scale can also be used to measure students’ awareness of action planning activities to see whether students are aware of the events that are occurring on campus.

Institutions are developing new assessment mechanisms to measure program effectiveness. For example, Cornell University conducted a randomized controlled trial evaluating the effectiveness of its new bystander intervention video, Intervene, as a stand-alone intervention among undergraduate and graduate students. It also conducted a pilot evaluation of the accompanying workshop among undergraduate students. After four weeks, students who watched the stand-alone video reported a higher likelihood to intervene for most situations compared to a control group who did not view the video. The workshop was effective at increasing students’ likelihood to intervene for most situations as measured in the four-week follow-up survey.

Pennsylvania State University is developing assessment instruments to measure effectiveness of specific programs such as online modules for incoming students, New Student Orientation programming, bystander intervention initiatives, Center for Women Students programming, and Title IX training and education.

Some institutions have used centralization and standardization to make it easier to measure the effectiveness of actions taken. For example, the University of Iowa’s Anti-Violence Coalition developed a two-year plan in response to climate survey data, evidence-informed practices, and best practice recommendations for preventing sexual misconduct, dating violence, and stalking. The plan includes identifying and exploring ways to centralize the evaluation of all student prevention education programs through coordination in the Office of the Vice President for Student Life. The intent is to identify measures of success that can be tracked across all prevention programs, building on a current tracking and assessment tool.

Institutions are conducting pre-/post-evaluations of particular actions, changes, or interventions. For example, Michigan State University’s Sexual Assault and Relationship Violence (SARV) Prevention Program has conducted pre- and post-tests of its programs for several years. The SARV program recently created an attendance and effectiveness tool that shows the development and reach of the program. Additionally, MSU is in the early stages of an evaluation and realignment of its student health and wellness functions. New and innovative strategies for sharing information and evaluating program effectiveness are likely to emerge from this process.

The University of Colorado Boulder will conduct pre- and post-evaluations to measure the effectiveness of prevention interventions designed to increase reporting, build bystander skills intended to reduce the incidence of sexual assault, and build skills for supporting a friend who has experienced a traumatic event. The campus also plans to implement an evaluation program for understanding the experiences of students who formally report an incident of sexual misconduct to the university.
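A pre-/post-evaluation of the kind described above typically compares each participant's score before and after a program. The following is a minimal sketch of that comparison, assuming matched scores on a hypothetical 1-to-5 self-rating scale. The function name and the scores are invented for illustration and do not represent any institution's instrument or results.

```python
from statistics import mean


def mean_paired_change(pre_scores, post_scores):
    """Average per-participant change (post minus pre) for matched scores."""
    if len(pre_scores) != len(post_scores):
        raise ValueError("pre and post scores must be matched one-to-one")
    differences = [post - pre for pre, post in zip(pre_scores, post_scores)]
    return mean(differences)


# Hypothetical self-rated confidence to intervene, same five participants.
pre = [2, 3, 3, 4, 2]
post = [3, 4, 3, 5, 4]

change = mean_paired_change(pre, post)
print(f"Mean change per participant: {change}")
```

A positive mean change is only suggestive on its own. Without a comparison group, as in the randomized trial described earlier, it cannot separate the program's effect from other changes over the same period.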

Universities are also participating in multi-institutional evaluations of training programs. For example, many responding institutions are involved with Green Dot trainings, particularly for bystander intervention. As noted earlier, nine universities specifically mentioned Green Dot in their responses, and 25 of 61 institutions that provided examples of their activities for this report are listed as having certified Green Dot instructors.

Green Dot has been rigorously evaluated for its effect in increasing active bystander behavior and reducing interpersonal violent victimization and perpetration rates. For example, Ann Coker and her colleagues compared undergraduate students attending a college with the Green Dot bystander intervention with students at two colleges without bystander programs. They reported that violence rates in the past academic year were lower on campuses with Green Dot than in the comparison campuses for unwanted sexual victimization, sexual harassment, stalking, and psychological dating violence victimization and perpetration.

A Multi-College Bystander Efficacy Evaluation (McBee), funded by the Centers for Disease Control and Prevention (CDC), is currently underway. It will define and evaluate the relative efficacy of different components of bystander intervention training programs, including Green Dot, to increase bystander efficacy and behaviors, reduce violence acceptance, and reduce interpersonal violent victimization and perpetration among college students. At least four of the institutions that responded to the survey are participating in the McBee study, and the results will be broadly applicable across institutions.

Institutions are particularly interested in assessing changes in the campus community’s knowledge about and utilization of campus policies and resources related to sexual assault and misconduct.

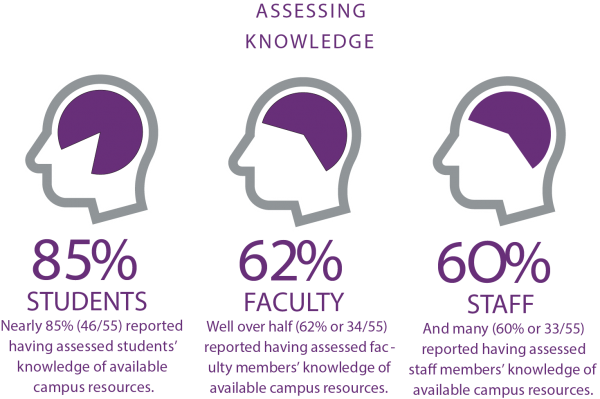

84% (46/55) of institutions reported assessing students’ knowledge about and utilization of policies and resources, and well over half are assessing faculty (62% or 34/55) and staff (60% or 33/55) knowledge.

Universities are examining changes in knowledge and utilization of policies by looking at repeated surveys or online assessments/training and by comparing different sources of data. A point many institutions made in their responses is that the first survey administration constitutes a baseline from which to measure change.

Northwestern University’s campus advocacy office for victims and Title IX office measure utilization of their resources each year to assess changes in reporting and awareness of resources. In addition, faculty, staff, and graduate students may complete a survey after taking the online training course that measures whether the online training participants feel they are more informed about sexual misconduct issues, how to prevent sexual misconduct, and what the options are for those who have experienced it.

Rutgers University–New Brunswick will be conducting additional assessments in the next one to two years to measure the changes in knowledge about policies and resources. Also, the Student Affairs Compliance & Title IX Office, which is responsible for responding to all reports of sexual violence involving students, regularly collects data regarding utilization of its processes and will be assessing the data within the next year.

In addition to ongoing efforts to enlist student engagement and feedback about their knowledge and perception of campus policies and resources, has worked to compare responses to the 2015 AAU Campus Climate Survey with the responses in its prevention programs, which ask nearly identical questions about institutional trust and awareness of resources. At first glance, students who completed the first-year prevention programs had much higher levels of knowledge about available resources for victims and more favorable perceptions of campus policies than the non-first-year students who completed the AAU survey (and had either never completed a prevention and policy disclosure program requirement or had done so at least one year prior).