This document is intended as a resource for college and university decision-makers as they determine which activities to undertake on their campuses related to understanding and preventing sexual assault and misconduct, with full recognition that each institution has a different context and that no single approach is a solution for all.

Introduction

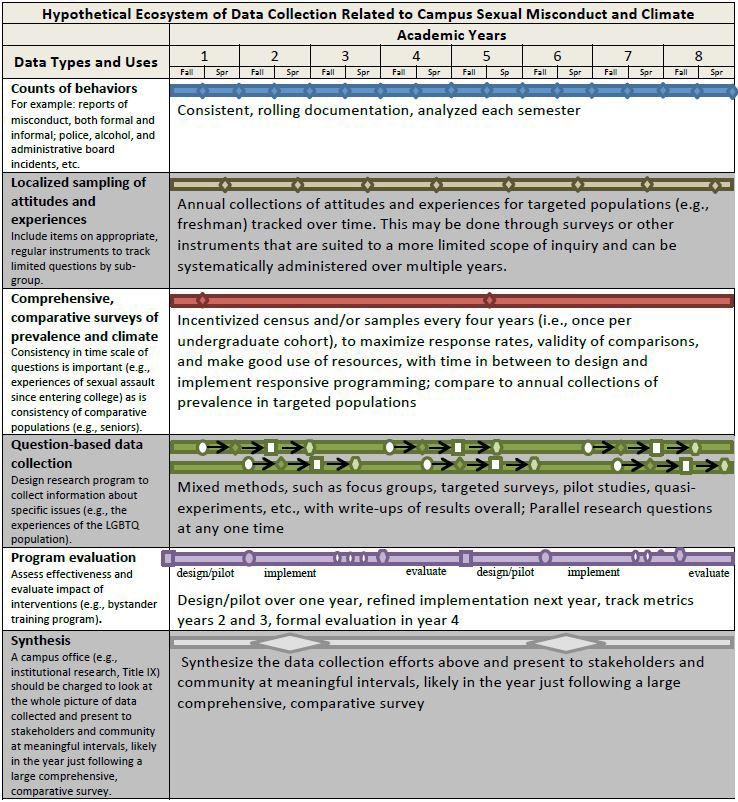

We argue that institutions should aspire to a “data ecosystem” approach to collecting data about sexual misconduct and assault rather than rely too heavily on any one data collection effort. This approach emphasizes synthesis across data sources as a goal. One implication of this approach is that large-scale comparative surveys are best conducted with at least four years between iterations, and complemented by many other data collections.

In recent years, a number of higher education institutions have completed sexual assault and climate surveys to better understand the scope and nature of campus sexual misconduct (e.g., the AAU Campus Climate Survey on Sexual Assault and Sexual Misconduct, the Rutgers iSPEAK Pilot Campus Survey, the RTI instrument used in the Campus Sexual Assault Study). Now these colleges and universities are investing in organizational changes to address the results of those surveys and are in the process of making decisions about the scope and timing of the next round of surveys.

National conversations about how to assess campus culture meaningfully have focused on omnibus, large-scale campus prevalence rate surveys. We suggest here that instead of focusing solely on large-scale prevalence rate surveys, institutions may want to take a multi-faceted approach, setting as an aspirational goal the establishment of an “ecosystem” of relevant data collection efforts that incorporates the use of both qualitative and quantitative methods.

Consistent with a public health approach, this treatment of a complex issue also would align more clearly with the intricacy of student experiences and attitudes with respect to sexual misconduct on a particular campus. This multi-faceted effort requires detailed coordination to make the best use of a campus’s resources and indeed, to make the best use of the data collected. Although each mode of data collection has strengths and limitations, a “mixed-methods” approach may be appropriate on many campuses and could maximize the understanding gained from investments in time and other resources.

As members of institutions that have undertaken climate surveys, we believe that widespread, systematic data collection is a critical part of any effort to address the pervasive and troubling occurrence of sexual assault and all forms of sexual misconduct on our campuses. We acknowledge that working toward broader and more effective data collection in this topic area presents a major challenge to our institutions. Surveys that are not based on sound evidence or tailored to individual campuses may provide institutions with insufficient information to continue the hard work of culture change.

We also offer guidance regarding the optimal interval between iterations of large-scale prevalence rate surveys, with a view toward tailoring the way that these surveys fit within the larger data collection ecosystem. An institution’s decisions about the intended uses of survey data have significant methodological, practical, and programmatic implications. These, in turn, lead to insights regarding the ideal timing of different data collection methods, including large-scale prevalence rate surveys. We provide rationale in the body of this document for three overarching considerations while making decisions about the interval between campus sexual climate surveys:

- Large-scale surveys are but one critical data collection mechanism within an ecosystem of other important, related data collection activities. Other data collection efforts that are institution- and program-specific should be undertaken in an ongoing way. Such data collection efforts are in fact taking place on most campuses already. They might include surveys, counts of incident reports, assessment of programmatic interventions, focus groups, and other projects, which complement large, comprehensive surveys.

- An interval of once every four years for large-scale, comparative surveys will provide institutions the necessary time, flexibility, and resources to conduct other important data collection efforts, including the evaluation of practices and programs related to campus climate and sexual misconduct. Further, this four-year time frame will increase the likelihood of achieving:

- Valid comparison populations (new cohorts of college students in particular);

- High response rates;

- Time to draw on initial results to make changes on campus;

- Good cost/benefit ratio for using limited institutional resources (e.g., on surveying versus other ways to address sexual assault and misconduct);

- Sufficient lead-time to plan and implement an increasingly useful large-scale comparative survey; and

- Meaningful target metrics to understand the results of interventions.

- Peer institutions should work together to plan survey strategies and coordinate the use of shared, institutionally appropriate questions. Individual institutions must retain the ability to develop and ask only the survey questions that make sense for their own communities, but campuses should explore robust partnership across institutions. This type of collaboration allows for understanding of local prevalence rates and climate metrics within a broader context, helping immeasurably to interpret and act on results. We outline ways that institutions can work together to increase the power of the data they collect on their respective campuses.

An Ecosystem of Data Collection

Although large-scale surveys of prevalence rates should be viewed as an important step toward a full assessment of campus climate, they are too often treated as endpoints in culture change. This view obscures the importance of mixed assessment methods and risks hindering the campus culture change that these surveys are meant to spur.

A campus-wide, omnibus prevalence rate and climate survey can be a major precipitating force in a cycle of in-depth, systematic campus climate assessment. This type of survey is not, however, designed to measure program effectiveness directly. Nor is it suited to measure short-term changes in prevalence rates.

To maximize campus resources and the effectiveness of large-scale prevalence rate surveys, these surveys should dovetail with other data collection activities, many of which are already taking place on campuses but are perhaps not examined in coordination with larger efforts. For a set of issues as complicated as sexual assault and misconduct, it makes sense to triangulate, using descriptive metrics, regression analyses, qualitative summaries, and other tools to address different aspects of these problems.

All campuses have diverse data sources that are collected regularly and could be synthesized. But many campuses find it difficult to build robust, interconnected data practices that allow for systematic and relatively automated examination of trends around important metrics. Below, we give an example of how data collection regarding campus sexual misconduct could be organized to make the best use of campus resources. There are many types of data that can be collected and used, each with different attributes and collection timelines, but drawing largely on overlapping resources.

Benefits of the Ecosystem Approach

Taking an ecosystem approach on a sustained basis encourages a campus to coordinate data collection projects and synthesize the multiple types of data that result from a mix of institutional studies. In this way, it facilitates more effective use of institution-specific campus data and resources, as compared with pursuing only one type of data, or with collecting multiple types of data but viewing each type of data in isolation. Taken alone, any one of the types of studies represented below could give useful data to a campus. Used in combination, multiple research methods provide administrators access to actionable information about their campus climate that would not be accessible by means of any single data source.

Running a large-scale quantitative survey can give administrators access to a wealth of data, but the presence of high-quality quantitative data is not enough for an institution to understand the richer context of survey responses. For example, a campus could find in their data that students are not knowledgeable about where they should go for help if they experienced sexual misconduct on campus.

This is a valuable piece of information, but does not tell administrators why students felt uninformed about the institution’s resources. As a follow-up study, the school could run a separate, more qualitative study of campus culture, such as with focus groups that target particular groups of interest, to give administrators a better understanding of how they should address the gap in student knowledge they found in the initial quantitative survey.

As a result, administrators could find that students are aware of all of their options, but unsure of which one to contact first. Alternatively, they could find that students are confused about which campus resources to contact depending on the type of sexual misconduct they have experienced. Aligning multiple research methods in this way would give administrators better insight into where additional resources would be most effective in improving student awareness of campus resources.

An ecosystem approach also embraces simultaneous campus research projects geared toward understanding separate communities that might have very different concerns, for example, undergraduate versus graduate and professional students. The AAU aggregate survey data, for example, showed that, across institutions, undergraduates experienced various types of sexual misconduct at very different rates compared to graduate and professional students. And at some participating institutions, undergraduates and graduate/professional students responded most strongly to different aspects of the survey results. For example, undergraduates emphasized concern with sexual assault rates while graduate and professional students emphasized concern with sexual harassment by faculty. Different research initiatives would be required to delve further into these different areas of concern. It would be feasible to carry out such initiatives simultaneously, provided there is a collective effort to tailor campus resources to smaller-scale, coordinated projects.

As we have all observed, there are tradeoffs involved in decision-making about data collection. For example, if a survey is too long, response rates tend to go down. It is typically not advisable to ask students to fill out many open-ended, qualitative survey questions in addition to a long series of closed-ended survey items. The goals of gathering rich qualitative responses and banks of quantitative surveys, therefore, are likely to be better served if not combined. When they are combined, data quality can suffer.

Similarly, quantitative or qualitative data collected using different methods may yield contradictory results. Indeed, given the complexity of the underlying issues involved, diverse results may be expected. An ecosystem approach will aid in making tradeoffs and in building the deeper understanding, developed through ongoing data collection, that is needed to interpret initially contradictory findings meaningfully.

If campuses come to a better understanding of how their existing and planned data sources are connected, that understanding will enable an increasingly systemic, and therefore powerful, approach. Although this approach is aspirational for most campuses, we believe it has the potential to benefit additional areas of campus life.

Just as schools increasingly perform data collection to study sexual misconduct, they also pursue data regarding a variety of topics that are of great interest to our communities and other bodies, such as cultural diversity, mental health, and academic support. In light of the variety of data collection efforts to which we ask students to contribute their time and energy (e.g., by filling out surveys or participating in focus groups), it is increasingly important that administrators coordinate these varying data collection efforts within a campus. This will help to limit interference among simultaneous data activities and help to maximize campus resources.

Rationale for a Four-Year Cycle

The logic for a four-year interval between large-scale prevalence rate surveys has multiple, interrelated components. Even at the most well-resourced campuses, the steps required for a successful campus-wide prevalence rate survey take time: design the instrument, pilot test it, finalize the design, advertise the survey, field the survey, process and analyze the results, communicate findings to all stakeholders, and use the results to inform programs and practices. At a minimum, the process of preparing a campus for this type of survey takes well over a year. Even if a campus has a survey instrument available, survey administration is a lengthy process. Experienced institutional researchers suggest it takes two years to plan, administer, and analyze the results of a large survey like this, plus an additional one to two years to communicate results to all corners of a campus and work with stakeholders to determine how to use those results most effectively. In addition to significant time demands, there are substantial methodological, practical, and ethical considerations that justify an interval of four or more years between iterations of a large-scale campus prevalence rate survey.

Methodological considerations

Importance of having high response rates. Findings may not be representative of the campus community unless a large fraction of sample members participate. A high response rate is critical for allowing analysts to calculate statistically reliable estimates and drill into the data set for insights about the student experience. For example, some schools had such high response rates to their campus sexual climate surveys that analysts were able to generate prevalence rate estimates for demographic groups that are not typically well-represented in smaller samples (e.g., male students).
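The link between the number of respondents and the reliability of a subgroup estimate can be illustrated with a rough calculation. The sketch below is not drawn from any of the surveys discussed here: it uses the standard normal-approximation margin of error for a proportion, assumes simple random sampling, and ignores nonresponse bias. The function name `margin_of_error`, the 5% prevalence rate, and the respondent counts are hypothetical values chosen only for illustration.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p based on n respondents.

    Assumes simple random sampling and the normal approximation; real survey
    analyses would also account for weighting and nonresponse.
    """
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical illustration: an assumed 5% prevalence rate in a subgroup,
# estimated from progressively larger numbers of subgroup respondents.
for n in (100, 400, 1600):
    moe = margin_of_error(0.05, n)
    print(f"n={n}: +/- {moe * 100:.1f} percentage points")
```

Under these assumptions, quadrupling the number of respondents in a subgroup halves the margin of error, which is one reason estimates for small groups tend to become usable only when response rates are high.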

The significant efforts required to yield high response rates to a campus-wide survey would be virtually impossible to repeat on an annual or biennial basis. One solution to reconcile this reality with a desire or compliance-based need for more frequent campus climate surveys is to consider a sampling approach, in which a representative sample of students is invited to take the survey, as opposed to a census approach, in which all students are invited to take the survey. Some sexual assault and misconduct surveys have been designed for such a sampling approach. On the other hand, students on some campuses have argued against a sampling approach and advocated strongly for the census approach, on the grounds that all students should have the opportunity to voice their views and experiences regarding sexual assault and climate. In addition, institutions may want to analyze the relative pros and cons of census versus sampling methodologies on their campuses in terms of expense and time. Regardless of whether institutions adopt a sampling or census approach, ensuring representativeness is very important for interpretation of results. When using either approach, the methodological, practical, and ethical considerations discussed below are relevant.

Survey fatigue and completion of questions. Data quality suffers with increased survey frequency, typically because response rates become lower as survey populations become inundated with surveys and tire of participating in them. Frequent surveys stretch the goodwill of the student body to volunteer for surveys, whether participants are paid or not. Colleges and universities administer many different surveys per year, in addition to efforts to measure campus sexual climate. Survey fatigue can damage data quality across multiple topics an institution wishes to measure. For example, on some campuses, the administrative focus on the survey of sexual assault and misconduct was directly associated with a marked decrease in participation in other cyclical campus surveys, which those campuses relied on heavily for other aspects of their academic mission (e.g., measuring student satisfaction with academic advising resources). At those schools, high response rates and resultant high data quality on campus sexual climate surveys came at the cost of data quality – and therefore data-driven decision-making capability – in other topic areas.

Insufficient time to measure changes in target metrics. While prevalence rates might provide indirect evidence of the progress that a campus is making toward a healthier climate, that progress is unlikely to proceed fast enough to be registered annually or even biennially. Results can be difficult to interpret if insufficient time has elapsed for the designated metrics (i.e., prevalence and incidence rates) to change prior to re-measurement. Longer intervals between surveys make better use of campus resources as they are more likely to capture evidence of change as it occurs. Difficulties inherent to short intervals between measurements of prevalence rates are compounded by the fact that the personnel dedicated to administering these surveys are also frequently tasked with implementing programmatic changes in response to the survey results. Short intervals between surveys require personnel to shift focus back to running surveys, away from the hands-on work of improving campus education and responses to sexual misconduct. Externally mandated annual or biennial surveys therefore could have the unintended consequence of cutting down or slowing an institution’s ability to create the culture change that these surveys are meant to promote.

Omnibus surveys not suited to program evaluation. Campus-wide prevalence rate surveys can inspire changes to campus programming, but these surveys are not effective tools to evaluate the resulting programs. As campuses devote resources to trying new approaches in sexual misconduct prevention and education, campus leaders need to know which initiatives are working and which are not. Large-scale surveys on their own will not provide campus leaders the information they need to deploy effective prevention and education strategies. If prevalence rate surveys must be run annually or biennially, campuses would likely need to devote resources to running those surveys that otherwise could have been used for program evaluation.

Practical considerations

Resource trade-offs. Time is one of the most limited resources for members of college and university campuses. Quite simply, prioritizing one activity requires the de-prioritization of other activities on our campuses. For example, student involvement in launching sexual assault and climate surveys is critical in that student leaders tend to be more effective than administrators or faculty in encouraging campus participation. However, that effectiveness is costly to the student leaders and to the university, as promoting a survey removes those students from valuable alternative campus activities.

Similarly, it is all too likely that the same administrators tasked with creating and running campus education and response to sexual misconduct are also deeply involved in survey activities. We’ve seen that successful comprehensive surveys require a coordinated “all hands on deck” approach, for example at institutions with the highest response rates to large-scale comparative omnibus surveys. The impact of this resource dedication is broad and difficult to measure given that there are many important issues surrounding campus sexual climate to be addressed, including specific areas of education and reporting that are mandated by state and federal offices.

Finally, the administration of high-quality prevalence rate surveys is expensive. A successful survey or other assessment project requires significant staffing and resource allocation. For example, monetary incentives for survey participation are often used to increase campus response rates and in turn, increase the reliability of the data. The cost of survey incentives typically ranges from $5 to $25 per student. An institution with 20,000 undergraduates might choose to survey a representative sample of 6,000 students, translating to a cost of $30,000 to $150,000. This single line item can be a major expenditure for an institution, yet does not preclude the need for additional resource allocation to ensure a project’s success. These surveys must be approached in a way that is realistic given a school’s financial resources. Such projects must also be designed to be sustainable over time if they are to change practice and programming on the ground, operating in tandem with an institution’s ongoing support for extensive statistical reporting to the internal and external entities charged with tracking campus sexual misconduct education and response. For example, increasing the frequency of large-scale prevalence rate surveys could lead to redundancies with existing campus data efforts such as annual Clery Reports.
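The incentive arithmetic in the example above can be written out as a short calculation, which an institution could adapt to its own sample size and incentive amounts. This is only a sketch: the function name `incentive_cost_range` and the optional `paid_fraction` parameter (the share of sampled students who actually receive an incentive) are hypothetical and not part of any survey instrument discussed here. The default of 1.0 reproduces the figures quoted above, which assume every sampled student is paid.

```python
def incentive_cost_range(sample_size, low_per_student, high_per_student,
                         paid_fraction=1.0):
    """Return (low, high) total incentive cost in whole dollars.

    paid_fraction is a hypothetical knob: the share of sampled students who
    actually receive an incentive (1.0 means every sampled student is paid).
    """
    low = round(sample_size * low_per_student * paid_fraction)
    high = round(sample_size * high_per_student * paid_fraction)
    return low, high


# Figures from the example in the text: 6,000 students at $5 to $25 each.
low, high = incentive_cost_range(6_000, 5, 25)
print(f"${low:,} to ${high:,}")  # $30,000 to $150,000
```

Repeating the same calculation for each planned survey iteration makes the budget implication of a shorter survey interval easy to see before other staffing and administration costs are added.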

Ethical considerations

Frequent administration increases risk of adverse impact on students. Some students have expressed how difficult it is to answer questions about sexual assault and sexual misconduct. Answering such questions can trigger emotional reactions that interfere with an individual’s academic and personal wellbeing. Although there is a clear need to collect these data, it is worth considering how to do so in a way that minimizes the potential for adverse impact on students.

During the data collection period of large-scale surveys, administrators on some campuses heard from students who felt the survey was upsetting, suggesting that surveys of this type should be administered less frequently. Administrators also heard from students who expressed a desire for more frequent opportunities to share their perspectives with leaders on their campuses. One solution may be for campuses to implement standing online survey forms available to students annually or year-round, as a supplemental data collection mechanism in the interim between campus-wide survey efforts that occur every 4-5 years. In this way, students who would like to share their experience could choose to do so, students who would prefer not to be asked to take a survey may choose not to seek out the survey forms, and administrators would have a method to seek fairly continuous campus feedback without the substantial resource burden of undertaking a full campus-wide prevalence rate survey.

Responsibility to use data collected from students. We believe that students’ trust in their institutions and in higher education administration is an important cultural value. Such trust is built up over time and our experience is that it is furthered when students see that survey results are taken seriously and utilized. Conversely, student trust is diminished when such data use is not apparent. It takes time to analyze results sufficiently, discuss them, and make cogent use of them in ways that are visible to our communities.

Maximizing the Value of Large-Scale, Comparative Surveys

We have proposed some methodological, practical, and other considerations around the timing of large-scale surveys. We now turn to the question of how to maximize the comparative power of the data gathered by these instruments. Establishing the ability to compare datasets from different institutions is difficult, but there is widespread agreement among researchers that meaningful benchmarks are crucial to addressing sexual assault and misconduct at our institutions. As outlined in the Report on the AAU Climate Survey on Sexual Assault and Sexual Misconduct:

Prior studies of campus sexual assault and misconduct have been implemented for a small number of IHEs [Institutions of Higher Education] or for a national sample of students with relatively small samples for any particular IHE. To date, comparisons across surveys have been problematic because of different methodologies and different definitions. The AAU study is one of the first to implement a uniform methodology across multiple IHEs and to produce statistically reliable estimates for each IHE.

The use of common methodologies and definitions allows for much clearer interpretation of the scope of sexual assault and misconduct. For example, the estimate that “1 in 4” or “1 in 5” female undergraduates have experienced sexual assault since entering college has been much discussed. To understand what the best estimate on a campus really is, and to understand whether prevalence rates change over time, consistent methods should be employed across iterations of a survey. Some critical survey features, such as the timeframe being measured and the sub-populations being compared, should be constructed the same way each time a large-scale survey is run.

This primary guideline regarding consistency of survey methods raises questions that institutions should discuss internally and with their peers in preparation for future campus prevalence rate surveys. For example, institutions should review the implications of a survey timeframe such as “since entering the institution” to determine whether it is valid to compare data between campuses that follow different academic calendars, or to compare prevalence rates that were gathered at different times of year (e.g., a survey run in early fall compared with a survey run in the late spring). Institutions might also find that they need to be able to customize substantial portions of a large-scale survey, for questions whose value lies in their specificity to the campus rather than in comparison across campuses or over time (e.g., measuring student awareness about policies that exist at a single university). Although institutions might find value in collaborating on survey instruments, collaboration should occur solely at the discretion of the institution, to ensure that the entirety of a survey project is tuned to the specific needs and culture of the institution.

Benchmarking also benefits institutions by helping to build an audience for analysis. Being able to point to a meaningful comparison group can give great insight about data, but it can also help to foster campus interest in a school’s own results. At some schools, it was observed that campus stakeholders such as students, faculty, or other administrators were particularly interested in data presentations that offered side-by-side comparisons between the home school and other similar institutions. Campus-wide interest helps generate campus-wide culture change.

Finally, harmonizing survey methods across campuses can benefit schools through the opportunity to pool data across campuses. This benefit is particularly relevant regarding data collected from smaller subgroups within our communities. For example, the AAU survey gave many schools their first window into the campus sexual climate experiences of students who do not identify on the gender binary. Because multiple schools administered the same questionnaire to students of all genders on their campuses, analysts were able to construct a meaningful aggregate data set, with enough data points to generate more statistically reliable prevalence rate estimates for students who identified outside the gender binary than any one school could have generated on its own.

Despite the potential benefits of comparison and collaboration, these goals should not take precedence over an institution’s ability to customize a survey to suit its community. Even among similar campuses, consideration should be given foremost to the unique needs of an individual institution.

Institutional Case Study, Spring 2016

New external mandates have given some institutions experience with annual prevalence rate surveys. For example, one private university in the Northeast recently completed its second of three annual iterations of the same prevalence rate survey of undergraduate and graduate students. Several of the concerns described above have affected the institution’s students and administrators as a direct result of repeating a survey on an annual schedule.

Recognizing the need to maintain a reasonably high response rate in the second cycle of their survey, the university proactively doubled their incentive budget and greatly augmented their communication plan with a very significant social media component that was broadcast on multiple platforms. While these efforts enabled the university to achieve a very respectable response rate of 47%, their response rate for year two was 5% below the result achieved in the first survey.

Some returning students, particularly those who feel they have no direct experience with sexual misconduct, have questioned the need to answer the same questions about their experiences with campus sexual misconduct that they answered last year. These concerns are echoed in the response rate, which lagged behind that of the first survey iteration despite the campus dedicating considerable additional resources to promoting the second survey.

Perhaps most worryingly, some students who have had personal experience with sexual misconduct have found the additional promotional materials and email reminders to be sources of emotional distress. These students acknowledged the importance of the survey, and were willing to deal with the stress of completing the first survey, but found the second administration one year later to be even more “highly stressful” and “anxiety-inducing” than the first administration.

Although the second survey provided an opportunity to follow up on questions that emerged from the first survey, administrators found it challenging to design, test, and implement changes prior to fielding the second survey, and there is growing concern that rapidly repeated omnibus surveys may not be sustainable over the longer term.

Conclusion

The recent emphasis on using data to help campuses prevent and respond to sexual misconduct is an important part of any effort to foster a positive and respectful campus climate. Colleges and universities are increasingly motivated to administer large-scale surveys of campus sexual climate, but often neglect alternative means of campus climate assessment. We see large-scale, comprehensive prevalence rate surveys as powerful tools to employ toward those goals. However, these surveys have limits and should be employed as part of a broader effort to engage the campus community.

An omnibus survey can be a powerful opportunity for administrators to support their community and convey shared community values to a wide audience. But for the reasons outlined above, it is critical to consider the limitations and drawbacks of these surveys. Thorough review of the practicalities associated with large-scale prevalence rate surveys shows that these surveys may be administered every four years (i.e., once per undergraduate cohort) to gain maximum benefit from the data and avoid the drawbacks of overly frequent surveying.

Supporting a systematic “ecosystem” approach to campus data collection recognizes methodological limits and embraces the strengths of multiple types of data collection mechanisms. This approach is not a new idea or unique to the topic of campus sexual misconduct. It is consistent with a public health approach where large-scale surveys are typical tools among many others in a public health response to a complex issue. On a campus, as in other community settings, over-reliance on large-scale surveys risks a mismatch between the sheer amount of data collected and the quantity of directly useful analyses that can be translated into action.

To make the best use of increasingly limited resources in an area of critical campus action, we have suggested that institutions may be better served by aspiring to long-term coordination and synthesis of multiple data sources. Most of these data sources are tailored to the particular context of their institution and some allow for direct comparisons across institutions, without over-emphasizing any one data collection method. Through this approach, an institution can maximize its resources toward ongoing efforts to end campus sexual assault and sexual misconduct. Efforts based around mixed, institution-specific methods, together with peer benchmarking, are opportunities for colleges and universities to use all of the tools at their disposal toward evidence-based culture change.